There is something to be said for being in control of your car from instant to instant. First, there is a certain pleasure for most of us in choosing, owning and driving our own vehicle. Second, driving demands our constant attention to the road, its condition, and other vehicles’ behavior, so that surprises are minimized and dangerous situations are more readily anticipated and responded to. On the other hand, there are those visionaries who dream of getting into a yet-to-be-developed vehicle that will transport them from their suburban driveway to a parking garage in downtown Manhattan without their needing to touch the steering wheel or depress the gas or brake pedals.

Serious experimentation is underway by Google, Tesla Motors and others to at least approximate this objective: the driverless or “self-driving” car. Optimists cite the successful automated guideway transit (AGT) systems as a rationale for their high expectations for the self-driving car. Yet there are important differences.

AGT systems can be based on traditional running rails serving as the guideway, or the guideway can be separate from the running surface, linked via a slide running along the guideway surface to steer the running wheels. A non-physical “virtual” guideway, detected by sensors on the vehicle, may also be used.

How They Differ

There are major differences between AGT systems and self-driving (autonomous) vehicles that may dampen the enthusiasm of self-driving proponents. First, for the self-driving car there is no physical guideway. If a “guideway” can be construed, it is not fixed, but dependent on external signals, which are subject to change and may sometimes be missing or erroneous.

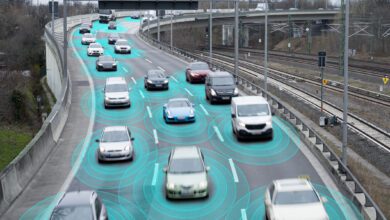

In urban high-traffic areas, self-driving cars would be sharing the roads with conventional owner-driven vehicles, commercial vehicles, and emergency vehicles. Lane changes would be required at frequent and unpredictable times. Many AGT installations are point-to-point, as at airports, where they transport passengers from remote parking areas. Thus they do not encounter the sharp turns and complex routing that self-driving cars must handle.

Automated Subway and Skyway Systems

Several automated urban transit systems are also viewed as positive models by advocates of self-driving cars. Among those that can operate completely automatically are certain lines of the Paris Metro, the Rome Metro, and the Budapest Metro. Onboard personnel may respond to certain passenger requests, but are not involved in obstacle detection, speed control, door operation, or emergency response.

The San Francisco Bay Area Rapid Transit (BART) system that began operations in 1972 does not quite meet the fully automatic definition. It was originally intended to operate its trains without human intervention of any kind, but that did not happen, perhaps due in part to an accident in which a two-car train under automatic control ran off the end of the line at Fremont. Staff on board now operate the doors but normally do not control operation of the train itself.

Glitches and Hurdles

Google began road tests of its self-driving Lexus and Prius vehicles in 2009. Beginning in May 2010, these autonomous vehicles were involved in 16 accidents. Most of them occurred in downtown Mountain View, and all were caused by humans, not by the autonomous cars themselves, according to the California DMV. In one case, a Google Lexus was braking for a traffic light and the car behind it rear-ended it at 17 mph.

AGT guideways, unlike the “guideways” for self-driving cars, are designer-controlled and self-contained. The latter, by contrast, include road signs, lane markings, road damage, etc. When Tesla Motors tested its “autopilot,” designed to adjust speeds, change lanes, and steer automatically, it found the system unable to identify the correct lane on a stretch of the freeway near LAX because of poor lane designation. Infrastructure improvements that might permit successful testing of some self-driving features are notably costly and hard to obtain. (I drive daily past the exit of the local high school on a two-way road whose once prominent center line has been completely worn away. My report of this fault to the town highway superintendent has been disregarded for weeks. I am hoping that new student and faculty drivers unfamiliar with the road will not stray into oncoming traffic. Yet a self-driving car exiting the high school lot might easily find itself in the wrong lane.)

The “guideway” for self-driving cars is based on pre-programmed route data, so temporary or recently installed traffic lights are ignored. Complex intersections may be problematic, causing the car to switch to a super-cautious mode and, presumably, inviting the real driver to take over. The route cannot be identified in snow or icy conditions. Unexpected but harmless debris on the road may cause the car to veer unnecessarily and without warning. Certain serious obstacles, like potholes, may not be detected. A police officer or emergency worker signaling the car to stop may not be discerned using the present technology. Other problems in a workable self-driving car system might relate to unexpected actors, including cyclists, pedestrians, and dogs and cats, not to mention standby drivers in the autonomous cars who are texting or drug-impaired.

Many critics of driverless vehicles are also concerned that it may be difficult or impossible for the automated system to make appropriate ethical decisions in complex emergency situations: for example, when converging on a stalled school bus, electing to brake and possibly ram it at a nominally low speed, as opposed to veering sharply off the road into a sheer rock face that would very likely critically injure the human “driver” of the automatic car.

Finally, there is the potential competition between self-driving cars and real drivers, involving unexpected behavior by the self-driven car that might encourage road rage or at least provoke the inherent feeling of superiority on the part of us real drivers. As one noted online, “I say we are not ready for a fleet of Sunday drivers who actually obey the speed limit.”

A Reasoned Approach

Toyota recently entered the picture with a plan of its own. Its research, to be centered at the artificial intelligence laboratories of MIT and Stanford, will be focused on the goal of intelligent cars that augment but do not replace their drivers. They may adopt a limited number of the features that a totally self-sufficient driverless car might have, such as detecting a ball bouncing into the street and automatically braking. Unlike Google and Tesla, Toyota appears to favor keeping the driver in charge, but protected from serious accidents by computer intervention.

The cards appear stacked against the self-driving car because it must navigate an ill-defined, fluid environment that constitutes a far less reliable “guideway” than that of a fixed guideway system.

You may not share my skepticism, but on balance, I can’t believe our streets will ever be filled with self-driving cars. Nor do I think that when you acquire your first Aston Martin or Lamborghini you’ll even briefly entertain the notion of letting it drive itself.

Your comments are welcome.

Note: The 2015 International Conference on Connected Vehicles and Expo (ICCVE) in Shenzhen, China, covered technology, policies, economics, and social implications of connected vehicles (including intelligent and autonomous vehicles) and cybersecurity in vehicles and transportation systems. The 2016 ICCVE is scheduled for 24-28 October in Seattle, Wash.

Donald Christiansen is the former editor and publisher of IEEE Spectrum and an independent publishing consultant. He is a Fellow of the IEEE.

I agree that self-driving cars, as popularly portrayed, are dubious, given the many exceptions to a clear road, most of which you describe well. Years ago, I envisioned a system that would allow autonomous operation of a car only after entering a high-speed road that had passed specific guideway standards which would be actively monitored and maintained. My system envisioned ad-hoc connection to a power and data “rail” at the edge of the roadway, but nevertheless, I think autonomous cars have a place on such trans-metropolitan and long-distance roadways. Of course, this will require appropriate roadway standards to be agreed by disparate commercial and governmental entities, which may prove to be the main impediment.

Meanwhile, we have intelligent cruise and creep controls, and lane-centering systems, that can take some of the tedium out of driving safely. The rest will follow in time.

sobering and thorough, thanks

In recent conversations with colleagues on this topic, the general consensus was along the lines of the author, Mr. Christiansen, and the comments by Mr. Gallea. One area I think is of concern is the legal system and how it will deal with accidents, injuries, fatalities, property damage, and such that might be caused by faulty autonomous systems. It is already bad enough with current cars and the number of safety recalls that cost the auto manufacturers big money. It’s my opinion that this aspect could keep fully autonomous cars from ever making their way into our driveways. On a related note, as current and near-future cars are adding more and more “safety” devices that sense objects in blind spots, do automatic braking, or provide other inputs to the driver, at what point is the common driver going to be overloaded with added features or additional warnings on the dashboard or by computer voice that cause sensory overload and delayed or incorrect driver reactions to situations? This is in addition to cars now having centralized “control/navigation/entertainment” systems to further distract drivers, not to mention all the other stuff (phones, texting, eating, …). My opinion is that the “common driver” will not migrate to a totally autonomous car because of the complexities introduced, not trusting the computer, and not wanting the legal liability for the computer causing the accident.

When you reach MY age, your opinions about this will change with a resounding “bang.” IMMOBILITY IS A CURSE!!!

Unexpected, random behavior will remain primarily the province of the human driver.

What is necessary is that ALL GUIDANCE be on the exclusive basis of VISUAL cues and that there shall be NO vehicle-to-vehicle communication, which can, alas, be hacked. In the extreme, even vision can be hacked, of course, but the effort required is extreme.

GPS is for trip plan navigation, while radar, lidar, etc. will simply prove to be nuisances.

Actually, there are some reasons to expect that automatons are likely to make better decisions, on average, in critical situations than people commonly do. For one thing, with decent computing power, the automatons usually can analyze more information from more sources faster than the human brain can. Second, they can access more kinds of sensory information from more sensory devices than the human can. Third, connected to distributed information networks, they can at least potentially have a much broader scope of “situational awareness” than the human can access and absorb.

There may well be a more difficult question than whether automatons can be trusted to make better decisions than humans do. That is: What happens when the automatons can and do? Serious problems are likely to arise when automated systems perform important tasks more accurately, more effectively, and more reliably than humans — most of the time.

For more on this, see my essay, “Can Robots Be Trusted?”: j.mp/LJP-RoboTrust