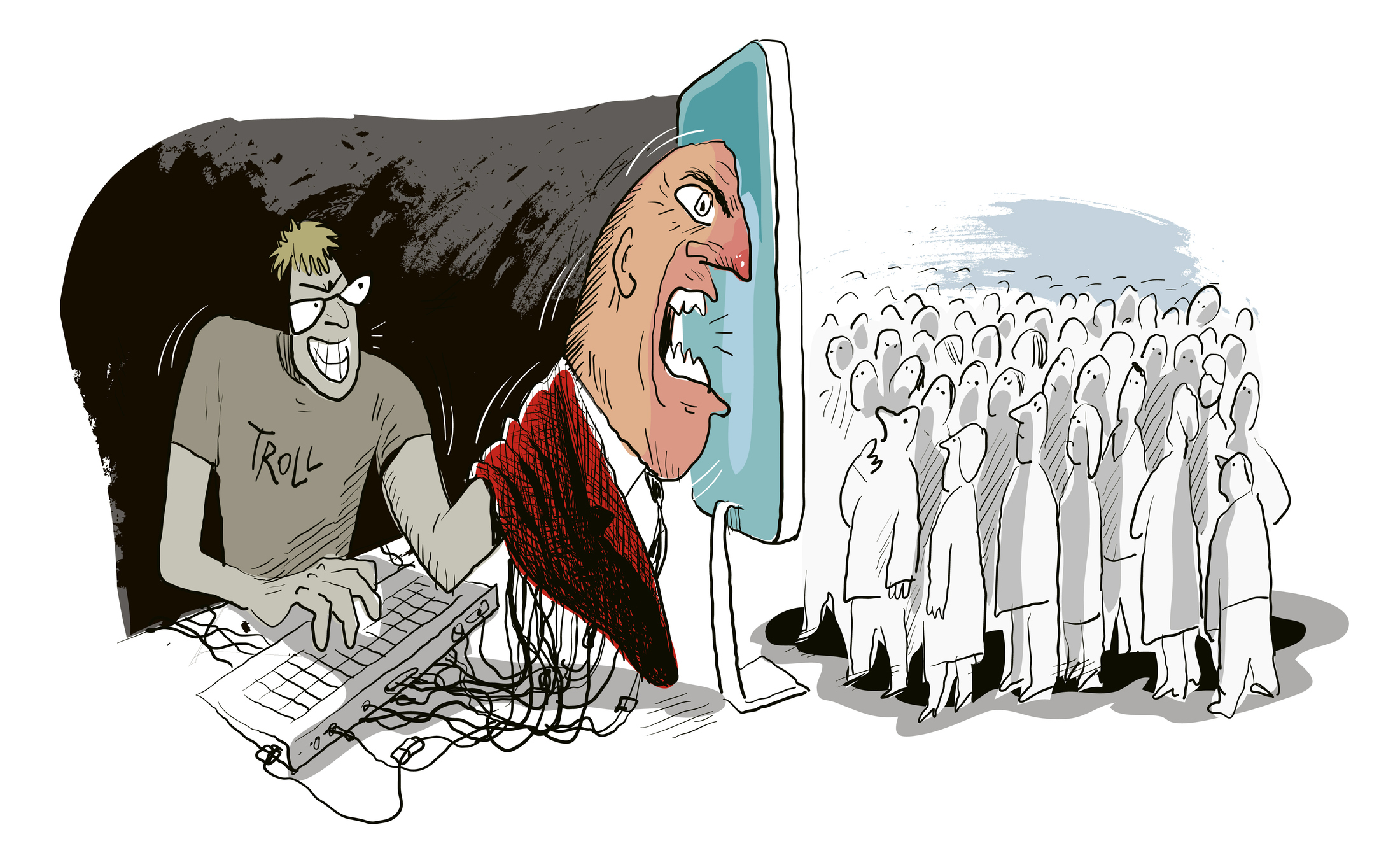

A deepfake is synthetic content generated from existing data by a neural network to produce a convincing new representation. Because deepfakes are more believable than synthetic content produced with traditional manipulation methods, they are particularly susceptible to misuse. Audio deepfakes have been used for scams and disinformation in many recent cases, including a robocall mimicking U.S. President Biden’s voice, and disinformation spread through deepfake images once caused a plunge in the U.S. stock market. AI-generated nonconsensual pornographic content has targeted members of the public and celebrities alike. Philosopher Don Fallis has even argued that deepfakes are an epistemic threat, i.e., a threat to our ability to acquire truth and knowledge.

The potential of deepfakes to scale scams and disinformation efficiently has alarmed technology and media companies enough to form an initiative, the Coalition for Content Provenance and Authenticity (C2PA), to establish authentication standards for content in its various forms. Cases of deepfakes being used to influence elections have spurred lawmakers, both in the U.S. and around the globe, to demand labeling of deepfake content. But how effectively can content be labeled and authenticated, and what are the limits of authentication?

Challenges for Deepfake Detection

Deepfake detectors are models that analyze a given piece of media for features indicating, with some degree of statistical confidence, that it was created by a generative model. As generative methods advance, detectors become vulnerable to evasion and tend to perform poorly across the wide range of datasets and models that can be used to create synthetic media. In short, how to build a universal deepfake detector for a given type of media remains an open question.
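In practice, "some degree of statistical confidence" usually comes down to a decision threshold on the detector's output score. The sketch below is a hypothetical illustration, not any particular detector: it assumes a detector whose score is higher for "more likely synthetic" media, and calibrates the threshold on media known to be real so that the false-positive rate on that calibration set stays at or below a chosen target. The function names and the score values are made up.

```python
import math

def threshold_for_fpr(real_scores, target_fpr):
    """Pick a threshold so at most `target_fpr` of known-real items score above it.

    Assumes higher scores mean "more likely synthetic" and 0 <= target_fpr < 1.
    """
    if not real_scores or not 0 <= target_fpr < 1:
        raise ValueError("need calibration scores and 0 <= target_fpr < 1")
    ranked = sorted(real_scores, reverse=True)
    allowed = math.floor(target_fpr * len(ranked))  # real items allowed above threshold
    return ranked[allowed]

def is_flagged(score, threshold):
    """Flag media whose detector score is strictly above the calibrated threshold."""
    return score > threshold

# Hypothetical detector scores on 100 pieces of media known to be real.
real_scores = [i / 100 for i in range(100)]
threshold = threshold_for_fpr(real_scores, target_fpr=0.05)
false_positives = sum(is_flagged(s, threshold) for s in real_scores)
print(threshold, false_positives)  # 0.94 5
```

The catch, which mirrors the generalization problem described above, is that a threshold calibrated on one dataset or generator offers no guarantee on media from another.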

Some generative methods leave unique noise patterns, or fingerprints, in the images they create, which makes it possible to identify not only a deepfake but also the method used to create it. Detectors that rely on physiological discrepancies, e.g., in eye blinking or respiration, are promising for detecting both deepfake video and audio, but not all deepfakes include physiological features to analyze. Frame-to-frame spatio-temporal and audio-visual inconsistencies have proven useful for identifying deepfake videos.
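A minimal sketch of fingerprint-based attribution, assuming tiny grayscale images stored as nested lists and a simulated "generator" that stamps a fixed pseudo-random pattern onto its outputs (real generator fingerprints are subtler and are usually extracted with stronger denoisers). It follows the idea in the text: strip smooth image content with a high-pass filter, average the remaining noise residuals of many known outputs to estimate each generator's fingerprint, then attribute a new image to the fingerprint its residual correlates with best, or to none if no correlation clears a threshold. All names, sizes, and the 0.5 threshold are illustrative assumptions.

```python
import math
import random

SIZE = 16  # toy image side length (assumption: tiny grayscale images)

def box_blur(img):
    """3x3 mean filter; border pixels average only the neighbors that exist."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - 1), min(h, y + 2))
                    for xx in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) / len(vals)
    return out

def noise_residual(img):
    """High-pass residual: image minus its blurred copy (removes smooth content)."""
    blurred = box_blur(img)
    return [[p - b for p, b in zip(row, brow)] for row, brow in zip(img, blurred)]

def average_residual(images):
    """Estimate a generator fingerprint by averaging residuals of its outputs."""
    res = [noise_residual(im) for im in images]
    h, w = len(res[0]), len(res[0][0])
    return [[sum(r[y][x] for r in res) / len(res) for x in range(w)] for y in range(h)]

def correlation(a, b):
    """Normalized (Pearson) correlation between two equally sized 2-D arrays."""
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    num = sum((x - ma) * (y - mb) for x, y in zip(fa, fb))
    den = math.sqrt(sum((x - ma) ** 2 for x in fa) * sum((y - mb) ** 2 for y in fb))
    return num / den if den else 0.0

def attribute(img, fingerprints, threshold=0.5):
    """Return (best-matching generator name or None, all correlation scores)."""
    res = noise_residual(img)
    scores = {name: correlation(res, fp) for name, fp in fingerprints.items()}
    best = max(scores, key=scores.get)
    return (best if scores[best] >= threshold else None), scores

# --- Toy simulation: every generator and image here is made up ---
rng = random.Random(0)

def make_pattern(amplitude=3.0):
    """Hypothetical generator artifact: a fixed pseudo-random +/- pattern."""
    return [[rng.choice((-amplitude, amplitude)) for _ in range(SIZE)] for _ in range(SIZE)]

def natural_content():
    """Smooth 'scene' (constant + horizontal sinusoid) plus mild sensor noise."""
    base, phase = rng.uniform(80, 160), rng.uniform(0, 2 * math.pi)
    return [[base + 10 * math.sin(2 * math.pi * x / SIZE + phase) + rng.gauss(0, 1)
             for x in range(SIZE)] for _ in range(SIZE)]

def generate(pattern):
    """Simulated deepfake: natural-looking content plus the generator's pattern."""
    return [[c + p for c, p in zip(crow, prow)]
            for crow, prow in zip(natural_content(), pattern)]

patterns = {"gen_A": make_pattern(), "gen_B": make_pattern()}
fingerprints = {name: average_residual([generate(p) for _ in range(10)])
                for name, p in patterns.items()}

label_a, _ = attribute(generate(patterns["gen_A"]), fingerprints)
label_b, _ = attribute(generate(patterns["gen_B"]), fingerprints)
label_real, _ = attribute(natural_content(), fingerprints)
print(label_a, label_b, label_real)
```

With these toy settings the generator pattern dominates each output's residual, so an output should correlate strongly only with its own generator's fingerprint, while the unmarked "real" image should match neither.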

However, physiological and temporal techniques cannot be applied to a single-image deepfake. Meanwhile, AI-generated text is notoriously difficult to detect. Detection alone is therefore insufficient, and methods to authenticate content are needed.

Current Limits to Authentication Technologies

The goal of content authentication is either to actively embed markings or patterns that are imperceptible to human senses into media, or to define a methodology for tracking the provenance of media that is not AI-generated. Blockchains are being explored for provenance tracking, but they can be slow and cumbersome.
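The basic data structure that blockchain-based provenance builds on is a hash chain. The following hypothetical sketch (not the C2PA manifest format) records each editing step with a hash of the resulting content and a hash of the previous record, so rewriting any earlier step breaks the chain. It deliberately omits the digital signatures and distributed ledger a real system would add; the record fields and function names are assumptions.

```python
import hashlib
import json

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def record_hash(record: dict) -> str:
    """Hash a record via canonical (sorted-key) JSON so hashing is deterministic."""
    return sha256_hex(json.dumps(record, sort_keys=True).encode("utf-8"))

def append_step(chain: list, action: str, content: bytes) -> None:
    """Append a provenance record linked to the previous record by its hash."""
    prev = record_hash(chain[-1]) if chain else None
    chain.append({"action": action, "content_sha256": sha256_hex(content), "prev": prev})

def verify(chain: list, final_content: bytes) -> bool:
    """Check every back-link and that the last record matches the content in hand."""
    if not chain or chain[0]["prev"] is not None:
        return False
    for earlier, later in zip(chain, chain[1:]):
        if later["prev"] != record_hash(earlier):
            return False
    return chain[-1]["content_sha256"] == sha256_hex(final_content)

# Hypothetical history: capture on a camera, then crop.
chain = []
raw = b"raw sensor bytes from a camera"
append_step(chain, "capture", raw)
cropped = raw[:16]
append_step(chain, "crop", cropped)

intact = verify(chain, cropped)      # untouched history and content
chain[0]["action"] = "generate"     # someone rewrites the first step
tampered = verify(chain, cropped)
print(intact, tampered)  # True False
```

Note that an attacker who replaces the content and rewrites the final record consistently would go unnoticed here, which is why real provenance systems also sign their records.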

Pattern embedding, meanwhile, can be applied to non-synthetic media to indicate its authenticity, or to synthetic media during generation to indicate that it was produced by AI. Traditional techniques for copyright protection and authentication are often vulnerable to attacks by neural networks, so new authentication methods are needed. Some approaches embed metadata or credentials, which can be as small as a single bit, into media, e.g., a key identifying the content owner, the subject matter, or the creation method. Most recent research focuses on blind embedding methods based on neural networks, rather than semi-blind or non-blind ones, because blind methods do not require the original media for authentication.
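To make "blind" concrete, here is a deliberately simple, classical (non-neural) sketch: a short payload, even a single bit meaning "AI-generated", is written into the least significant bits of key-selected pixels, and extraction needs only the marked pixels and the key, never the original. It is an illustration only; least-significant-bit embedding is exactly the kind of traditional technique that does not survive compression or neural-network attacks. The function names and the key are hypothetical.

```python
import random

def _carrier_positions(n_pixels, n_bits, key):
    """A secret integer key seeds which pixels carry the payload."""
    return random.Random(key).sample(range(n_pixels), n_bits)

def embed_bits(pixels, bits, key):
    """Overwrite the least significant bit of key-selected 8-bit pixels."""
    marked = list(pixels)
    for pos, bit in zip(_carrier_positions(len(pixels), len(bits), key), bits):
        marked[pos] = (marked[pos] & ~1) | bit
    return marked

def extract_bits(pixels, n_bits, key):
    """Blind extraction: only the marked pixels and the key are needed."""
    return [pixels[pos] & 1 for pos in _carrier_positions(len(pixels), n_bits, key)]

rng = random.Random(1)
cover = [rng.randrange(256) for _ in range(64)]  # toy 8x8 grayscale image, flattened
payload = [1]                                     # 1 bit, e.g. "this content is AI-generated"
marked = embed_bits(cover, payload, key=2024)

recovered = extract_bits(marked, len(payload), key=2024)
max_change = max(abs(a - b) for a, b in zip(cover, marked))
print(recovered, max_change <= 1)  # [1] True
```

A semi-blind or non-blind variant would additionally need the watermark or the unmarked `cover` at verification time, which is the practical burden blind methods avoid.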

Watermarking is another authentication method besides metadata embedding, and watermarks can be robust, semi-fragile, or fragile. Robust watermarks are designed to withstand manipulation, so they can still be recovered from generated media. Semi-fragile watermarks, in contrast, survive typical processing such as compression or resizing, but lose integrity, or disappear altogether, when media is modified using generative methods. Fragile watermarks reveal any modification by becoming unrecoverable if even a single bit of the media is changed. However, watermarks, whether applied to original content or during AI synthesis, have been shown to be vulnerable to tampering or removal.
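The two ends of this spectrum can be contrasted in a small sketch over a flattened 8-bit grayscale image, using classical techniques chosen for illustration rather than any specific published scheme. The robust half adds a key-seeded pseudo-random ±1 pattern (a spread-spectrum watermark) and detects it blindly by correlation, so it should still register after added noise and re-quantization. The fragile half stores a SHA-256 hash of the rest of the image in 256 least significant bits, so flipping any single bit breaks verification. Strength, threshold, and noise levels are assumptions tuned to the toy data; semi-fragile schemes sit between the two and are not shown.

```python
import hashlib
import random

# ---- Robust: additive spread-spectrum watermark with a blind correlation detector ----

def pn_sequence(n, key):
    """Key-seeded pseudo-random +/-1 carrier."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]

def embed_robust(pixels, key, strength=3.0):
    """Add a low-amplitude copy of the carrier to every pixel."""
    return [p + strength * w for p, w in zip(pixels, pn_sequence(len(pixels), key))]

def detect_robust(pixels, key, threshold=1.5):
    """Correlate the mean-removed image with the carrier; no original needed."""
    mean = sum(pixels) / len(pixels)
    carrier = pn_sequence(len(pixels), key)
    score = sum((p - mean) * w for p, w in zip(pixels, carrier)) / len(pixels)
    return score > threshold

# ---- Fragile: hash of the image stored in its own least significant bits ----

HASH_BITS = 256  # SHA-256 digest length in bits

def _protected_hash_bits(pixels):
    """Hash everything except the 256 LSBs that will hold the hash itself."""
    protected = bytes([p >> 1 for p in pixels[:HASH_BITS]] + list(pixels[HASH_BITS:]))
    digest = hashlib.sha256(protected).digest()
    return [(byte >> i) & 1 for byte in digest for i in range(8)]

def embed_fragile(pixels):
    """Write the hash bits into the LSBs of the first 256 pixels (8-bit ints)."""
    marked = list(pixels)
    for i, bit in enumerate(_protected_hash_bits(pixels)):
        marked[i] = (marked[i] & ~1) | bit
    return marked

def verify_fragile(pixels):
    """Any single-bit change alters either the hash or the stored hash bits."""
    expected = _protected_hash_bits(pixels)
    return all((pixels[i] & 1) == bit for i, bit in enumerate(expected))

# ---- Toy demo ----
rng = random.Random(42)
image = [min(255, max(0, round(rng.gauss(128, 20)))) for _ in range(10_000)]

robust = embed_robust(image, key=7)
processed = [round(v + rng.gauss(0, 10)) for v in robust]  # noise + re-quantization
robust_survives = detect_robust(processed, key=7)
unmarked_detected = detect_robust(image, key=7)

fragile = embed_fragile(image)
fragile_intact = verify_fragile(fragile)
tampered = list(fragile)
tampered[5000] ^= 1  # flip a single bit
fragile_after_edit = verify_fragile(tampered)
print(robust_survives, unmarked_detected, fragile_intact, fragile_after_edit)
```

The fragile scheme is trivially defeated by anyone who can recompute and re-embed the hash, which illustrates the tampering and removal weakness noted above; real fragile schemes typically add a secret key or signature.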

As such, some methods go on the offensive to protect copyright and intellectual property. Generated images may also become easily identifiable as deepfakes if the training data of the generative model is “poisoned”, e.g., with a tool like Nightshade, or if source images are “immunized” against AI editing with a tool like PhotoGuard; both approaches support authentication by causing the model to produce less reliable deepfakes. WaveFuzz and Venomave are examples of data poisoning tools for audio.
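Tools in this family typically compute small, bounded perturbations that steer a model's behavior. As a hypothetical illustration of that mechanic only (not the actual algorithm of Nightshade, PhotoGuard, WaveFuzz, or Venomave), the sketch below runs L∞ projected gradient descent (PGD) against a toy linear "encoder": it nudges a protected image so the encoder maps it closer to the representation of a flat gray image, while no pixel changes by more than ε. The encoder, target, ε, and step size are all assumptions.

```python
import random

def encode(W, x):
    """Toy linear 'encoder': latent = W @ x (stand-in for a real model's encoder)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def loss_and_grad(W, x, target):
    """Loss ||W x - target||^2 and its gradient 2 W^T (W x - target)."""
    diff = [z - t for z, t in zip(encode(W, x), target)]
    loss = sum(d * d for d in diff)
    grad = [2 * sum(W[k][j] * diff[k] for k in range(len(W))) for j in range(len(x))]
    return loss, grad

def sign(v):
    return (v > 0) - (v < 0)

def immunize(W, image, target, eps=0.03, step=0.005, iters=100):
    """L-infinity PGD: signed gradient steps, clipped back to [-eps, eps] per pixel."""
    delta = [0.0] * len(image)
    for _ in range(iters):
        _, grad = loss_and_grad(W, [p + d for p, d in zip(image, delta)], target)
        delta = [max(-eps, min(eps, d - step * sign(g))) for d, g in zip(delta, grad)]
    return [p + d for p, d in zip(image, delta)]

rng = random.Random(0)
n_pixels, n_latent, eps = 64, 8, 0.03
W = [[rng.gauss(0, 1) for _ in range(n_pixels)] for _ in range(n_latent)]
image = [rng.uniform(0.2, 0.8) for _ in range(n_pixels)]  # pixels in [0, 1]; eps keeps them in range
target = encode(W, [0.5] * n_pixels)                      # latent of a flat gray image

before, _ = loss_and_grad(W, image, target)
protected = immunize(W, image, target, eps=eps)
after, _ = loss_and_grad(W, protected, target)
max_change = max(abs(p - q) for p, q in zip(protected, image))
print(f"distance to target: {before:.2f} -> {after:.2f}, max pixel change {max_change:.3f}")
```

With a real model, the encoder would be a deep network and the gradient would come from automatic differentiation, but the budgeted signed-gradient loop has the same shape.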

What’s Next for Content Authentication?

Given the landscape of generative technologies and the current limits of content authentication, protecting copyright or ascertaining authenticity is very challenging. Moreover, malicious actors cannot be expected to adhere to standards or laws that require AI-generated content to be labeled as synthetic. Layering protective measures is the most likely path to robust, tamper-resistant protection. In general, content creators should not be expected to apply protections themselves; rather, protection should be applied by default when non-synthetic content is created.

Disclosure: my research is supported in part by the NSF Center for Hardware and Embedded Systems Security and Trust (CHEST) under Grant 1916762.