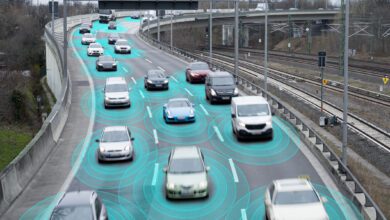

Using AI in computer vision and human-computer interactions will have far-reaching implications. AI-enabled robots will perform difficult and dangerous tasks that require human-like intelligence. Self-driving cars will revolutionize automobile transportation systems and reduce traffic fatalities. AI will improve quality of life through smart cities and decision support in healthcare, social services, criminal justice, and the environment. What does this mean for policymakers?

Just What Is Artificial Intelligence?

Artificial Intelligence (AI) is the theory and development of computer systems able to perform tasks normally requiring human intelligence, such as visual perception, speech recognition, learning, decision-making, and natural language processing. The field began in the early days of digital computers. Over the past seven decades, AI has experienced several hype cycles, with interest waxing and waning. At times, the technology showed great promise, and at others, it failed to meet expectations. However, improved AI reliability and effectiveness have increased positive public perceptions of, and interest in, the technology and the related applications of machine learning and autonomous robotic systems. Recent successful AI demonstrations have all contributed to renewed and increased focus on, and excitement about, Artificial Intelligence. They include IBM’s Watson competing against human Jeopardy! champions and winning; Google’s AlphaGo besting a human champion in the ancient Chinese board game Go; and the popular demonstrations of self-driving cars.

AI applications now significantly and positively impact every aspect of American society, including national security and commerce. However, several notable personalities have recently expressed concerns about uncontrolled and unregulated artificial intelligence development. Ill-informed ideas and comments abound about AI taking over the world, killer robots, and privacy violations. The resulting unnecessary panic could lead to bad policymaking based more on Hollywood science fiction than on AI’s real capabilities.

To ensure public acceptance of, and confidence in, the increased use and safety of AI technologies, it is important that public policies and government regulations enhance safety, privacy, intellectual property rights, and cybersecurity. Insufficient regulatory oversight, or failure to legislatively address the potential societal and cultural impact of AI as a fast-moving, emerging technology, could result in high-profile controversies related to critical technological failures. Such events could lead to policymaking that unwisely stifles entire industries, or to regulations that do not effectively protect the public. To begin exploring and addressing these concerns, the U.S. House of Representatives convened the Congressional Artificial Intelligence Caucus this year. The Caucus’ goal is to promote a greater understanding of AI’s potential, and to foster a legislative agenda to address Artificial Intelligence R&D needs and regulatory concerns.

Recognizing the growing need to provide legislators and regulators with the technical expertise necessary to develop technologically sound AI policies, IEEE-USA convened the Artificial Intelligence Ad Hoc Policy Committee. The AI Ad Hoc Policy Committee published its first IEEE-USA position statement on AI in 2017, laying out a framework to ensure appropriate safeguards and protections are in place as the United States develops and uses this fast-moving technology. The framework addresses the following issues.

Research and Development

Greater federal investment in AI research and development (R&D) is essential to stimulate the economy, maintain U.S. competitiveness, create high-value jobs, and improve government services. The recent acceleration of successful AI applications makes it timely to focus R&D investment not only on more capable AI systems, but also on maximizing societal benefits and mitigating any associated risks.

Presently, the NITRD (Networking and Information Technology Research and Development) National Coordination Office (NCO) coordinates all federally funded R&D in robotics and intelligent systems (RIS). RIS R&D focuses on advancing physical and computational agents that complement, expand, or emulate human physical capabilities or intelligence. Examples include robotics hardware and software design, application and practical use; machine perception; intelligent cognition, adaptation and learning; mobility and manipulation; human-machine interaction; distributed and networked robotics; increasingly autonomous systems; and related applications. FY16 RIS funding was $225 million, or 5 percent of the NITRD budget. The planned RIS investment in FY17 is $220.5 million, a decrease of $4.5 million. Given the potentially significant societal benefits from AI R&D investment, the federal government should greatly increase, not decrease, AI R&D funding.
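To put the RIS figures quoted above in proportion, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the variable and function names are hypothetical, the inputs are the three numbers stated in the text, and the implied total NITRD budget is derived from the "5 percent" figure rather than taken from any budget document.

```python
# Figures quoted in the text, in millions of U.S. dollars.
FY16_RIS_MUSD = 225.0
FY17_RIS_MUSD = 220.5
FY16_RIS_SHARE = 0.05  # "5 percent of the NITRD budget"


def budget_change(previous, current):
    """Return (absolute change, percent change) from previous to current."""
    delta = current - previous
    return delta, 100.0 * delta / previous


# Year-over-year change in planned RIS funding.
delta_musd, delta_pct = budget_change(FY16_RIS_MUSD, FY17_RIS_MUSD)

# Total FY16 NITRD budget implied by the 5 percent share (a derived
# estimate, not a figure stated in the text).
implied_nitrd_fy16_musd = FY16_RIS_MUSD / FY16_RIS_SHARE

print(f"Change in RIS funding: {delta_musd:+.1f} million ({delta_pct:+.1f}%)")
print(f"Implied FY16 NITRD budget: about {implied_nitrd_fy16_musd:,.0f} million")
```

Under these assumptions, the planned cut works out to roughly 2 percent of FY16 RIS funding, and the 5 percent share implies an overall FY16 NITRD budget of about $4.5 billion.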

While the United States remains the innovative leader, it is not alone in AI R&D. Intense international competition for AI supremacy exists. Japan is well known for using Fuzzy Logic AI to control national railways. The robotics industry is more important in Japan than in any other country; Japan employs more than 250,000 industrial robotic devices. Established in 1982, the European Coordinating Committee for Artificial Intelligence (ECCAI) coordinates AI R&D in Europe, and promotes AI study, research and application. In addition to programming AI to operate on conventional digital computers, the EU is also investing heavily in building artificial brains (i.e., neuromorphic computing) to implement AI. Chinese technology companies, such as Baidu, are also investing heavily in AI technology. China wants to be an artificial intelligence world leader, due to AI’s strategic importance for national security and economic growth.

China also has a massive market for AI adoption. MIT Technology Review cited Baidu’s AI work in speech recognition for its low error rate. Prodigious venture capital investment in China is sustaining AI R&D in startup companies. A dozen banking and insurance companies in China, as well as state lenders like China Merchants Bank, are providing funding to help companies develop AI software and provide efficient services. Facing such competitors, the United States cannot afford to underfund AI R&D, or it will be left behind in AI technology innovation.

Workforce Development

If you are a U.S. IEEE member, particularly a member of the IEEE Robotics and Automation Society, Computer Society, or Computational Intelligence Society, or an IEEE-USA volunteer, you may already be working on AI. If you are not in an AI-related field, you might wonder, “Will my job be eliminated? Will a robot replace me?” A recent study in Japan finds that concern about job loss and replacement due to AI developments is strongest among people older than 50. The concern is less pronounced among the younger generation, the “digital natives,” who have greater experience with digital computers in their everyday lives.

Meanwhile, the United States has conducted no such study. The consensus among U.S. economists and labor experts is that robots will take over some jobs, while emerging AI technologies will create new and different ones. The ratio of job creation to job loss is unknown. Historically, introducing advanced technologies has always created new, if different, jobs.

No doubt, AI developments will create jobs requiring knowledge and skill in science, technology, engineering and mathematics (STEM) (Notes 2, 3). AI’s extraordinary growth creates demand for knowledgeable personnel in AI, as well as related fields. Numerous public and private organizations have recommended increasing student and workforce knowledge in AI expertise.

Internationally, fierce competition exists for engineering talent with AI expertise, leading to a threat of the U.S. workforce losing its AI talent. Addressing workforce needs will help to ensure the United States maintains its technological competitiveness internationally, and provides a workforce that continues to acquire relevant future AI skills.

Private sector and academic stakeholders hold a clear consensus that effectively governing AI and AI-related technologies requires a level of technical expertise that the federal government does not currently possess. Effective governance requires additional experts able to understand and analyze the interactions among AI technologies, programmatic objectives, and overall societal values. Creating a public policy, legal and regulatory environment — allowing innovation to flourish, while protecting the public — requires significant AI technical expertise.

Safety, Risks, Laws and Regulations

Consumer adoption of AI depends on the public’s trust, its opinions about the technology’s safety, and how the technology affects privacy, employment rates, and the economy. These factors will drive the AI policymaking agenda. The technology can both capture the imagination, as it does with self-driving cars, and inspire fear, as it does in many science fiction scenarios. So, the public must understand the difference between AI reality and science fiction, if it is to accept and trust AI applications as an integral part of modern living. Acceptance and trust will develop only to the extent that the federal government addresses AI safety and privacy concerns, and helps individuals displaced by AI obtain alternative quality employment.

We are currently seeing a whole new generation of previously unforeseen autonomous systems, such as AI-controlled machines able to learn and make entirely independent decisions. Drones and self-driving cars are prime examples. Naturally, questions exist, such as: Are these autonomous machines safe? And if something goes wrong, is there a “kill switch” for humans to disable the machine?

Despite the benefits of technology that removes the need for human effort, AI also presents many challenging safety issues that could, directly or indirectly, harm economic prosperity and national security. AI system failures, such as the fatal crash of a Tesla vehicle operating in a partially automated driving mode, could set the technology back many years. The domestic AI market is expected to grow rapidly, reaching $70 billion by 2020, according to Merrill Lynch/Bank of America. Access to foreign markets will depend on harmonizing international safety standards and regulations.

These risks are not limited to robotic autonomous systems. AI-enabled expert systems for data analysis also present potential risks of wrong decision-making based on faulty analysis. For example, an AI algorithm may make an incorrect medical diagnosis, resulting in the wrong treatment being prescribed and in undue patient suffering, or even death. An incorrect stock trading decision may cause an investor to lose millions of dollars. The public must be aware of, and willing to accept, these risks in AI-enabled data analysis systems.

Achieving transparency in the design and use of a system remains a challenge and a hurdle to adopting AI technology. We increasingly use AI technology to control critical infrastructure, ranging from the financial sector to the electric grid. AI safety considerations and risks vary considerably across domains, and federal agencies must understand them and develop guidance that promotes responsible adoption of these technologies, so that the public and industry can accept them. In addition, a clear understanding of the safety challenges and risks, and of the extent of potential threats and vulnerabilities, is critical to formulating public policies and regulations.

AI has powerful implications for national/homeland security. The military is keenly aware of the potential to increase its capabilities, while reducing U.S. casualties. However, using AI to support autonomous weapons is controversial. Further, AI may cause an international arms race, and global instability, especially when combined with robotics. Just as with other powerful weapons, certain international agreements may be necessary to appropriately shape development and use.

In the civilian sector, domestically deployed AI-enabled systems used for public safety purposes may be vulnerable to unauthorized access by people or governments, both foreign and domestic, harboring malicious intent. It is important to note that safety is considered differently in the civilian and military domains, and the two should be addressed separately.

Artificial intelligence has the potential to affect just about everything in our society. High-profile examples of AI include autonomous vehicles (such as drones and self-driving cars), medical diagnosis, creating art (such as poetry), proving mathematical theorems, playing games (such as Chess or Go), search engines (such as Google search), online assistants (such as Siri), image recognition in photographs, spam filtering, predicting judicial decisions, and targeting online advertisements.

Some researchers and scientists worry that artificial intelligence will spin out of control. However, leading AI researcher Rodney Brooks writes, “I think it is a mistake to be worrying about us developing malevolent AI anytime in the next few hundred years. I think the worry stems from a fundamental error in not distinguishing the difference between the very real recent advances in a particular aspect of AI, and the enormity and complexity of building sentient volitional intelligence.”

What’s your opinion about artificial intelligence, its impact on American society, and the future of the U.S. job market? Let us know. We’d love to hear what you think.

Notes

1. IEEE-USA, “Artificial Intelligence Research, Development, and Regulation,” 2017. https://ieeeusa.org/wp-content/uploads/2017/07/FINALformattedIEEEUSAAIPS.pdf

2. J. Holdren and M. Smith, “Preparing for the Future of Artificial Intelligence,” Executive Office of the President, National Science and Technology Council, 2016. https://obamawhitehouse.archives.gov/sites/default/files/whitehouse_files/microsites/ostp/NSTC/preparing_for_the_future_of_ai.pdf

3. Stanford University invited leading thinkers from several institutions to begin a 100-year effort to study and anticipate how the effects of artificial intelligence will ripple through every aspect of how people work, live and play. “Artificial Intelligence and Life in 2030,” One Hundred Year Study on Artificial Intelligence, 2016. https://ai100.stanford.edu/2016-report

Clifford Lau is a member of the IEEE-USA AI Ad Hoc Committee, the IEEE-USA Research & Development Policy Committee, and is a Life Fellow of the IEEE.